Autonomous Drone

Robot Autonomy Course Project

The robot autonomy project walked me through the entire process of developing an autonomous drone which can fly through dynamic environment and actively avoid obstacles. The project is divided into four parts, covering Trajectory Generation, Visual Odometry, SLAM, and Dynamic Path Planning, respectively.

Professor Nathan Micheal designed the project so that the students can get plenty hand-on experience and knowledge about Robot Autonomy as a whole. The the goals of the project are: using stereo camera video and IMU data to locate the drone in an unknown environment, to map the environment, and to guild the drone through the environment without hitting obstacles. To scale down the project, the path planning part is decoupled from the SLAM task. The path planning and controlling of the drone is in fact based on motion capture system but not visual odometry and IMU localization result. With the super accurate and expensive motion capture system, my teammates and I finally got the drone to fly over the designated area in FRC (field robotics center) without crashing!

Visual Odometry

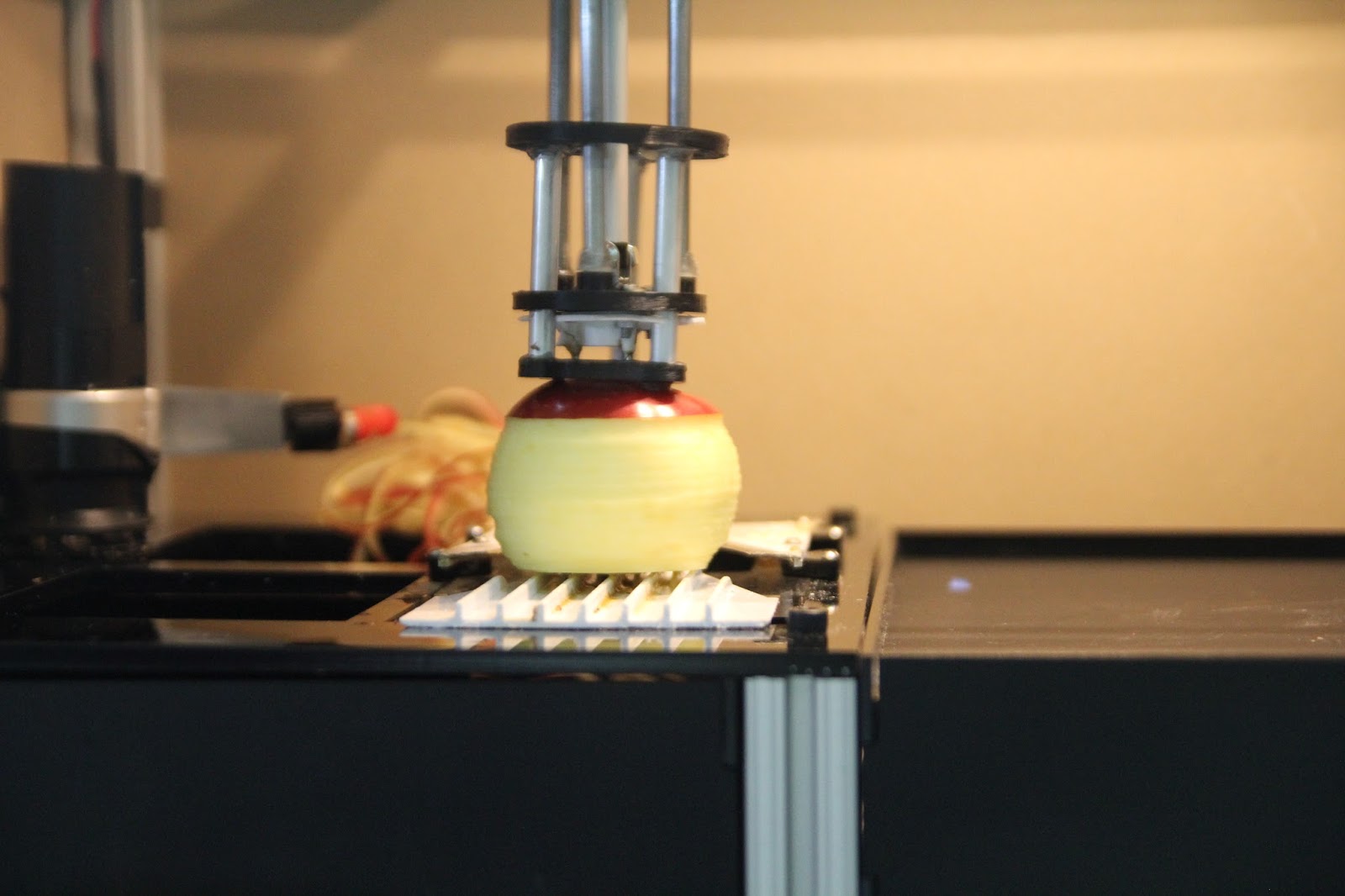

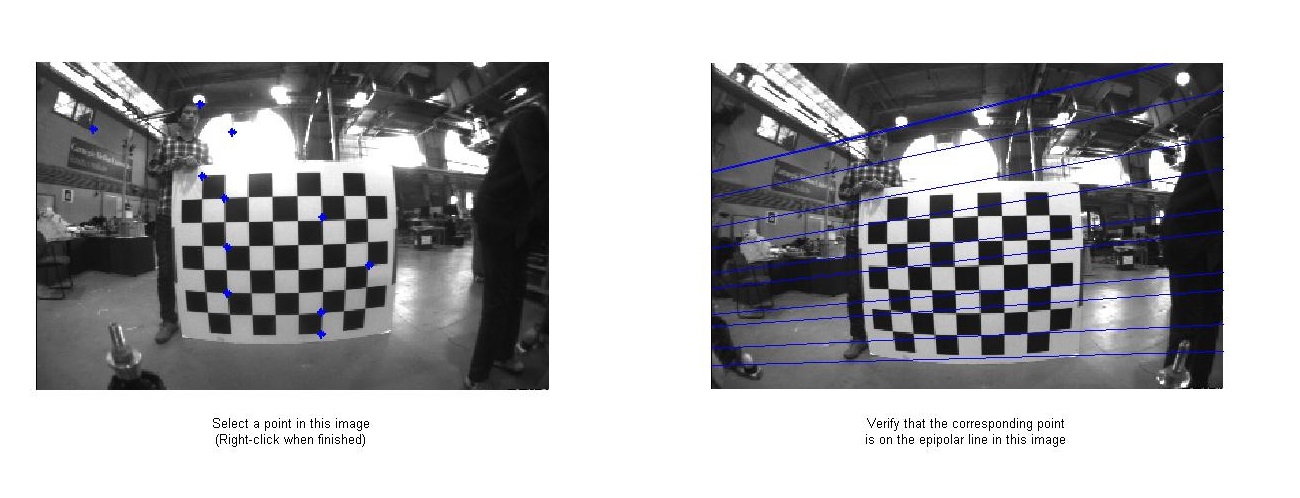

The first step is always camera calibration. As I have done this for Computer Vision project before, it is straight forward this time. Using CV toolbox in Matlab and photos of checkerboard, I got the intrinsic matrices and the translation matrix between the stereo cameras.

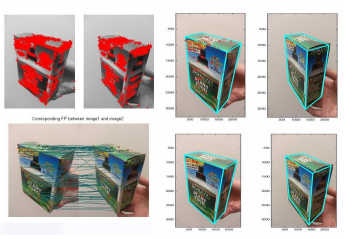

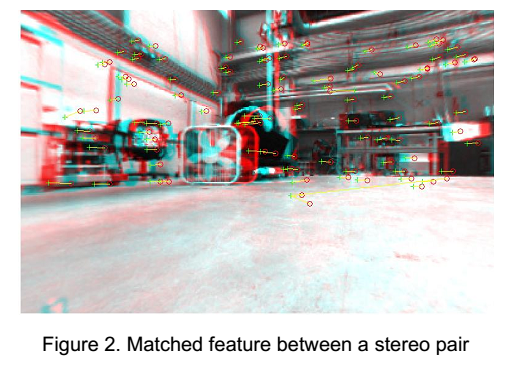

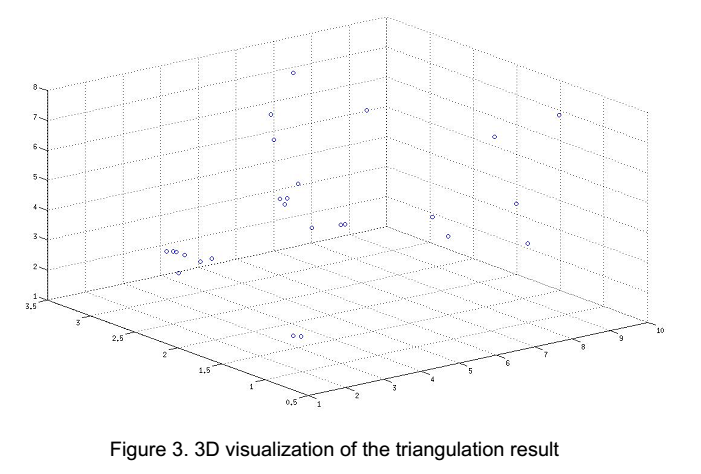

Then I did triangulation on detected matching features in both the stereo cameras(We tried Harris conner, SIFT, and a couple other features, and settled with SIFT), estimated their 3D locations in each frame. Then the difference in the 3D locations of the shared features in neighbor frames tells about the movement of the stereo camera - which is fixed on the drone. This is almost exactly the same problem I tried to solve in the Computer Vision project, so called PnP problem - Using 3D coordinates of objects and their coordinates in the camera images to determine the 3D coordinate of the camera itself.

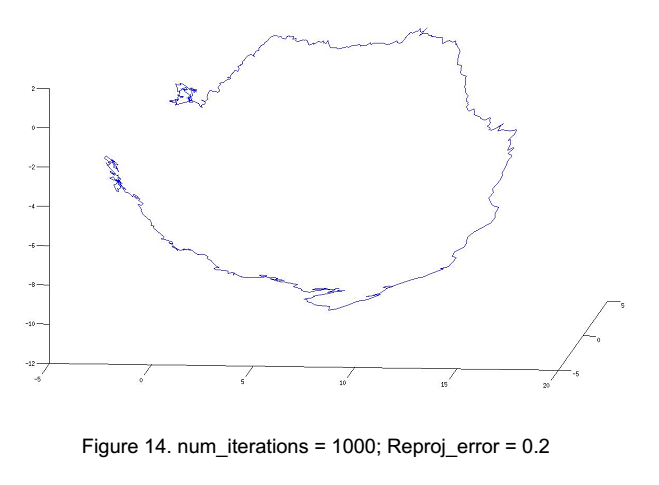

Calculating re-projection between frames is over-determined because there are usually more than 6 pairs of matching features between two frames. Therefore I used RANSAC to reject the outliers before calculating the translation matrix. The localization result using solely Visual Odometry doesn't look very satisfying but none the less interesting -

SLAM

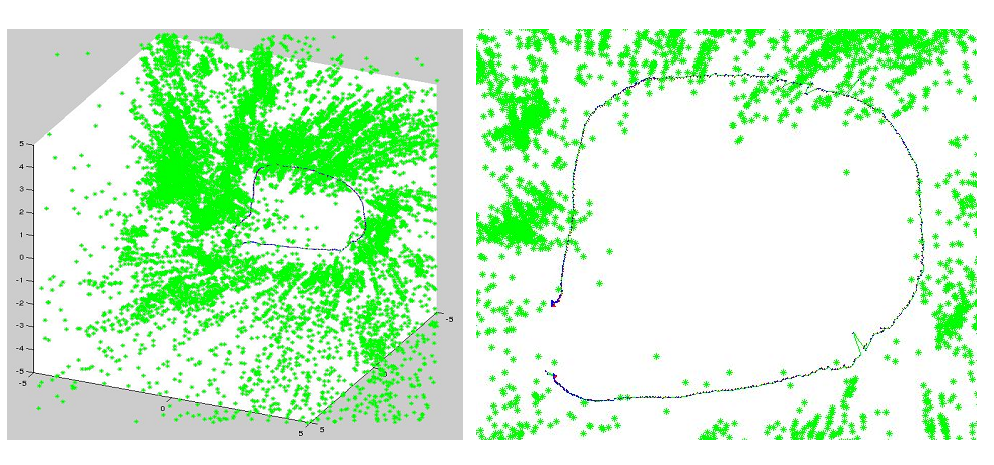

For the simultaneous localization and mapping, the state-of-the-art factor graph theory is introduced and applied. A factor graph takes multiple observation sources into account, and combine them with different levels of belief to make predictions. The prediction of robot location is represented by a probability function, taking into account all the past observations, and will be adjusted based on future observations as well. The level of belief of an observation is modeled by a variance. Thus ideally the final SLAM result will be smooth and reliable.

The professor directed us to a factor graph library GTSAM and we implemented the SLAM algorithm in MATLAB. The result is clearly better than using purely visual odometry data and naive translation estimation!

Trajectory Generation

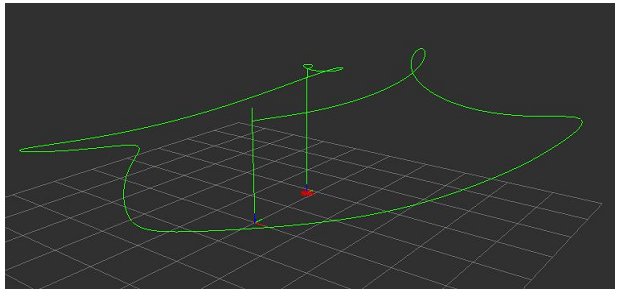

We implemented the algorithm for generating bounded acceleration trajectory along multiple way points. The algorithm minimizes energy cost by minimizing the squared control inputs, calculates the optimal polynomial that fits the required way-point velocities and times intervals between way-points.

Also in the state machine designed to execute the flight, the smooth constrains on acceleration/thrust are enforced everywhere. Therefore when transitioning states, where there is possibly a gap between desired positions and velocities before and after the transition, we created intermediate control parameters to make sure the step sizes of change in control are under a certain limit.

The trajectory and state machine are tested in a simulation environment provided by Professor Micheal.

Path Planning

For this part, the motion capture system provided very accurate coordinates of the robot and obstacles. We used A* algorithm from MATLAB and D* from SPBL library to perform the path planning.

Thanks:

My teammates: Wenda Wang, Sugandha Sangal and Alvin Chou. And Professor Nathan Micheal!!